Hey folks! Or Weis here with another digest 💌

Trust, as we know it, is disappearing. Trust me.

In all seriousness - the more interconnected we become, the more difficult it is to determine what is real, what is authentic, and what is reliable.

Misinformation, deepfakes, and AI-generated content have blurred the lines between truth and manipulation, making it harder than ever to take anything at face value. In the past, trust was an implicit part of communication, identity, and access. Today, it must be constantly verified, reinforced, and re-evaluated.

I don’t want to get too political or philosophical here (I don’t think that’s what you came for) - instead, I want to focus on the implications of this in the sphere of technology.

Who are you?

The systems we rely on—from authentication to access control—are built on the assumption that trust can be assigned and maintained. But what happens when traditional trust models fail?

Most access control systems today operate under a static trust assumption: once an identity is authenticated, trust is granted, and permissions are assigned accordingly. This model worked well in a world where identity was relatively stable and difficult to forge.

We no longer live in that world.

Identity itself is becoming more complex. AI agents are now acting on behalf of users, creating a new type of identity—one that’s neither fully human nor fully machine. Identity fraud is easier than ever, and behavioral manipulation makes static access rules ineffective.

The problem isn’t just authentication; it’s the way we evaluate trust itself.

What can you do?

Instead of asking, "Who is this?" and assigning trust upfront, I suggest we ask, "What are they trying to do?" and "How much risk does this action introduce?"

Rather than assigning trust first, we need to establish:

A Trust Score for the Identity – A dynamic score that changes over time based on authentication factors, behavioral patterns, and past interactions.

A Risk Score for the Action – A predefined score that reflects the sensitivity and potential consequences of a given action.

Access decisions should not be based on identity alone but on cross-referencing trust and risk. A request is approved only if the identity's trust score meets or exceeds the risk score of the action.

This shift in perspective changes everything. Rather than treating trust as an initial evaluation, we can look at it as an emergent property—a result of accumulated interactions, contextual risk assessments, and behavioral analysis. This approach is particularly relevant in access control, where decisions about what a user, a machine, or an AI agent can do should be based not just on identity but on a dynamic evaluation of risk and past behavior.

To understand how this works in practice, let’s break down the problem, look at how existing models for ranking trust can be adapted, and explore how access control systems can be restructured to handle trust as a fluid, evolving attribute rather than a static assignment.

What Does Trust Look Like?

We tend to think of trust as a simple binary: something is either trustworthy or it isn’t. A user is either verified or they are not. A system either allows access or denies it. But in reality, trust is rarely that clean-cut. It exists on a spectrum, fluctuating based on context, behavior, and perceived risk.

A great example of this can be found in AI bot detection models. We wrote about this a while ago, explaining how, rather than labeling an entity as either “good” or “bad,” it’s possible to assign a dynamic trust score based on multiple signals. Some bots are clearly malicious, some are known to be beneficial, and many exist in a gray area in between.

Having ranked your bots, you can quite easily create a far more granular rule set that enforces what they can or cannot do.
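To make the idea concrete, here is a minimal sketch of signal-based bot scoring feeding a tiered rule set. It is not the model from our earlier write-up: the signals (`verified_crawler`, `respects_robots_txt`, request rate, failed logins), the weights, the 80/40 thresholds, and the action names are all hypothetical, chosen only to show how several signals can collapse into one score and how that score maps to different permissions.

```python
from dataclasses import dataclass


@dataclass
class BotSignals:
    verified_crawler: bool     # identity confirmed, e.g. a known search-engine crawler
    respects_robots_txt: bool  # follows the site's crawl rules
    requests_per_minute: int
    failed_logins: int


def bot_trust_score(s: BotSignals) -> int:
    """Combine several signals into one score: 0 (malicious) to 100 (trusted)."""
    score = 50  # unknown bots start in the gray area
    if s.verified_crawler:
        score += 30
    if s.respects_robots_txt:
        score += 10
    if s.requests_per_minute > 120:
        score -= 20  # aggressive request rate
    score -= 10 * min(s.failed_logins, 5)  # credential-stuffing behavior
    return max(0, min(100, score))


# Granular rule set: each score band unlocks a different set of actions.
RULES = [
    (80, {"read_public", "crawl", "read_api"}),
    (40, {"read_public"}),
    (0, set()),
]


def allowed_actions(score: int) -> set:
    """Return the actions permitted for the highest band the score reaches."""
    for threshold, actions in RULES:
        if score >= threshold:
            return set(actions)  # copy so callers can't mutate the rule table
    return set()
```

With these weights, a verified crawler that respects robots.txt scores 90 and may crawl; an unidentified but polite bot scores 60 and is limited to public pages; a fast bot with three failed logins scores 0 and gets nothing.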

Now, this approach isn’t just useful for bots—it’s exactly the kind of framework we need for defining trust in human users and system identities. Instead of a binary decision, we can assign a trust score that changes dynamically as new signals are evaluated.
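One simple way to let a score change as new signals arrive is an exponential moving average, sketched below. This is an assumption for illustration, not a method the post prescribes: `TrustScore`, the 0-100 scale, and the `weight` parameter are hypothetical, and a real system would likely treat different signal types differently.

```python
class TrustScore:
    """A trust score on a 0-100 scale that drifts toward each new observation."""

    def __init__(self, initial: float = 50.0, weight: float = 0.2):
        if not 0.0 < weight <= 1.0:
            raise ValueError("weight must be in (0, 1]")
        self.value = initial
        self.weight = weight  # how far a single new signal moves the score

    def observe(self, signal: float) -> float:
        """Blend one new signal (0 = alarming, 100 = reassuring) into the score."""
        signal = max(0.0, min(100.0, signal))
        self.value = (1 - self.weight) * self.value + self.weight * signal
        return self.value


if __name__ == "__main__":
    score = TrustScore()
    for _ in range(10):
        score.observe(100)  # e.g. successful MFA from a familiar device
    print(round(score.value, 1))  # 94.6
    score.observe(0)  # e.g. a login from an unexpected country
    print(round(score.value, 1))  # 75.7
```

The `weight` trades responsiveness against stability: a higher value reacts faster to a suspicious event but also lets a single good signal rebuild trust faster.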

This is the fundamental shift: trust is not something we assign in advance. It is something we allow to emerge from what an entity is permitted to do and how it behaves over time.

How Trust-Based Access Control Works

If we stop assigning trust before action, how do we structure access control?

The key is to treat trust as a measurable, evolving attribute rather than a fixed label.

When a user or system requests access, we evaluate two things:

The Trust Score of the Entity – Based on authentication strength, behavior, and history.

The Risk Score of the Action – The more sensitive the action, the higher the trust required to perform it.

A request is approved if the trust score meets or exceeds the risk score.

For example, in a financial system:

Low-risk action (viewing account balance) → Minimal trust required.

Medium-risk action (transferring $5,000) → Requires multi-factor authentication.

High-risk action (transferring $1,000,000) → Requires additional human approval.

This model eliminates the need for static trust assignments—instead, it enables real-time trust evaluation that dynamically adapts to risk levels.

Putting Trust-Based Access Control into Practice

So how do we actually put this into practice? Instead of relying on static roles and predefined trust levels, I suggest introducing trust as a dynamic, measurable attribute in our access control system.

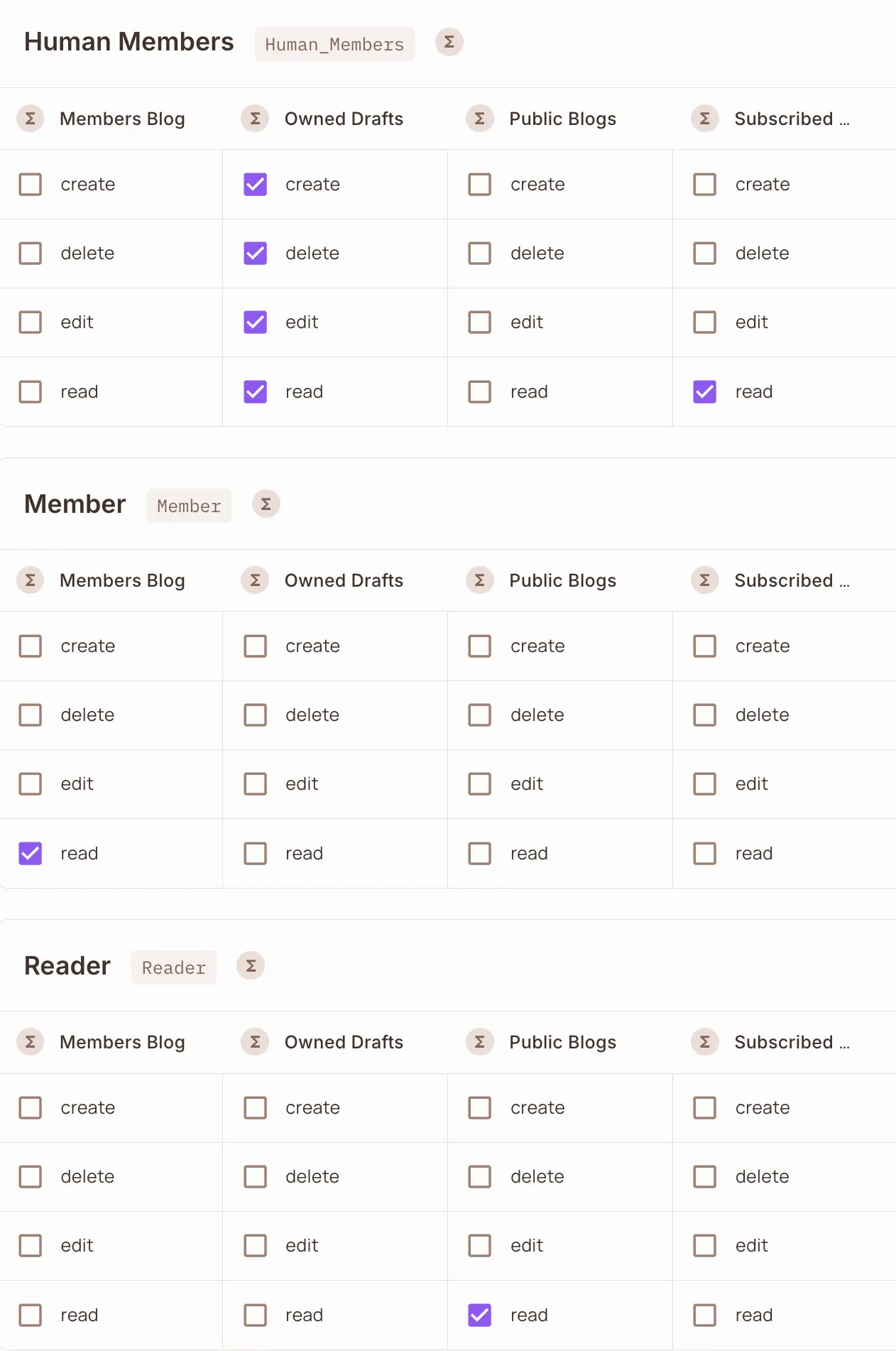

Fine-grained authorization models such as Attribute-Based Access Control (ABAC) can help here: both the trustworthiness of the user and the risk level of the action can be defined as attributes in our access policies.

With this approach, permissions are granted based on whether trust meets or exceeds risk at the time of the request. This means trust is not a label assigned once during authentication; it is continuously evaluated.

Let’s return to the financial example.

We’ll implement an access control policy where:

Trust level is assigned to the user dynamically (based on authentication strength, behavioral patterns, and historical data).

Risk level is assigned to the action dynamically (based on transaction amount and contextual factors).

Access is granted only if trust meets or exceeds risk.

Step 1: Defining Trust and Risk Scores

First, we need to establish how trust is calculated. A user’s trust score could be determined by:

Authentication strength – Password only vs. password + MFA vs. biometric verification.

Behavioral consistency – Does this request match previous behavior?

Past security incidents – Has this user triggered security alerts before?
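The three factors above could be combined roughly as follows. This is a sketch under assumptions: the `UserContext` fields, the authentication method names, and the rule of subtracting one point per warning sign are hypothetical. The score is kept on a 0-3 scale so it can be compared directly with the 1-3 risk scores in this example.

```python
from dataclasses import dataclass

# Hypothetical mapping of authentication strength to base trust (0-3 scale).
AUTH_STRENGTH = {"password": 1, "password+mfa": 2, "biometric": 3}


@dataclass
class UserContext:
    auth_method: str              # one of the AUTH_STRENGTH keys
    matches_usual_behavior: bool  # e.g. familiar device, location, time of day
    past_security_alerts: int     # alerts this user has triggered before


def trust_score(ctx: UserContext) -> int:
    """Start from authentication strength, then subtract for each warning sign."""
    score = AUTH_STRENGTH.get(ctx.auth_method, 0)  # unknown method earns no trust
    if not ctx.matches_usual_behavior:
        score -= 1
    if ctx.past_security_alerts > 0:
        score -= 1
    return max(0, min(3, score))
```

For instance, a biometric login from an unusual location by a user with a prior alert lands at 1, the same as a clean password-only session.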

On the other hand, risk scores are determined by the action being performed:

Low-risk (score 1) – Viewing an account balance.

Medium-risk (score 2) – Transferring up to $10,000.

High-risk (score 3) – Transferring over $10,000.

The rule is simple: if trust is greater than or equal to risk, access is granted. Otherwise, additional verification is required.
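The risk tiers and the comparison rule translate almost directly into code. A minimal sketch, assuming hypothetical action names (`view_balance`, `transfer`) and treating exactly $10,000 as medium risk, since that tier reads "up to $10,000":

```python
# Risk tiers from the example: 1 = low, 2 = medium, 3 = high.
MEDIUM_RISK_LIMIT = 10_000  # transfers up to this amount are medium risk


def risk_score(action: str, amount: float = 0) -> int:
    """Map an action (and, for transfers, its amount) to a 1-3 risk score."""
    if action == "view_balance":
        return 1
    if action == "transfer":
        return 2 if amount <= MEDIUM_RISK_LIMIT else 3
    raise ValueError(f"unknown action: {action!r}")  # unlisted actions are never granted


def decide(trust: int, action: str, amount: float = 0) -> str:
    """Grant when trust meets or exceeds risk; otherwise ask for more verification."""
    if trust >= risk_score(action, amount):
        return "grant"
    return "require_verification"
```

A user at trust 2 (say, password plus MFA with consistent behavior) can view balances and move up to $10,000, but `decide(2, "transfer", 50_000)` returns `"require_verification"`, which is where a step-up prompt would go.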

Step 2: Enforcing Policies in Code

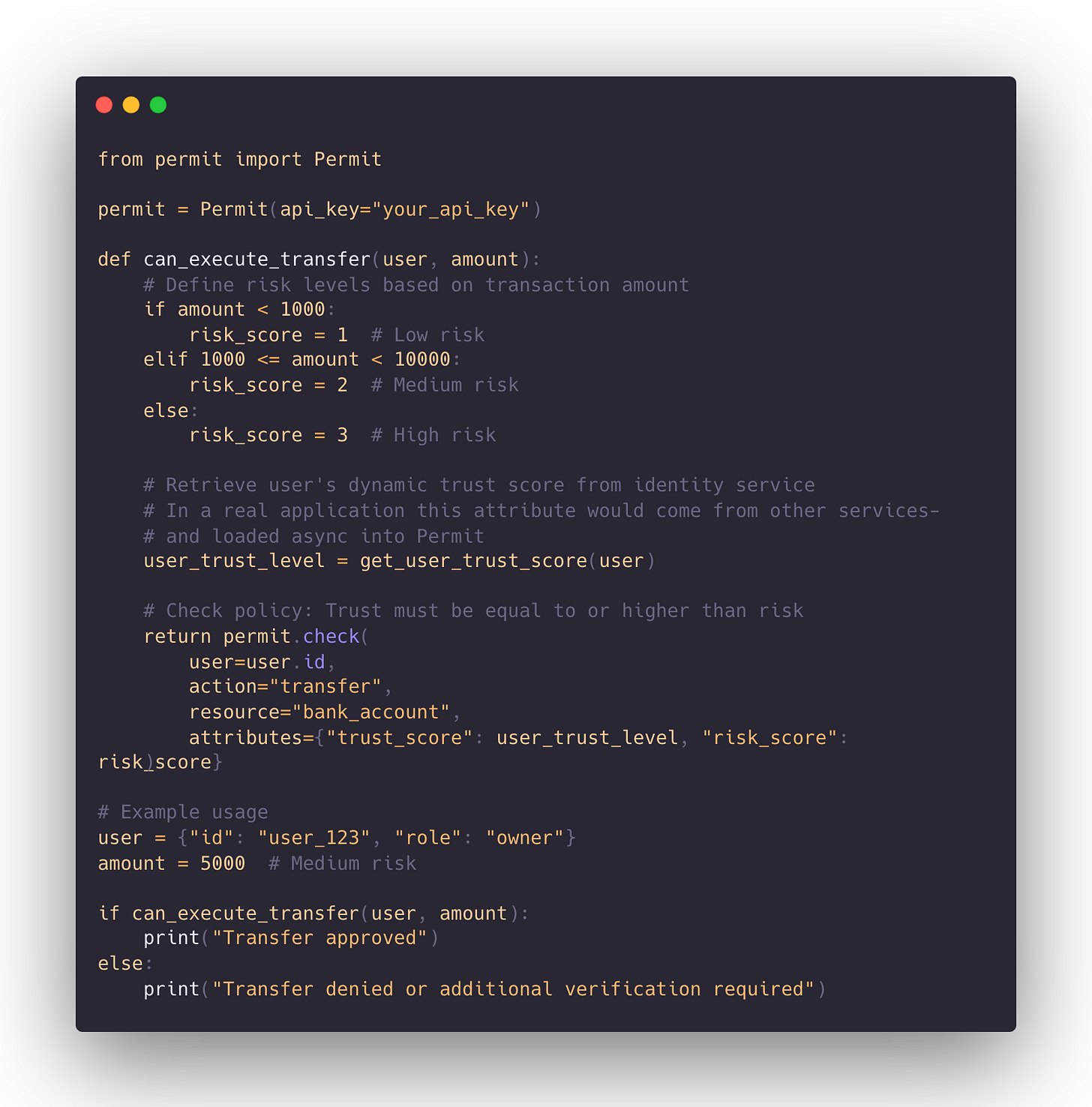

Now let’s look at how this works in practice using Permit’s SDK. The goal here is to apply an ABAC policy where trust and risk are both attributes, ensuring that trust-based access control is enforced at runtime.

This code example is obviously quite abstract, but I’m trying to convey the principle here:

This implementation shifts access control from a static role-based system to a dynamic trust-based model. Instead of making an upfront decision about whether a user is trustworthy, we continuously evaluate trustworthiness based on real-time context.
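For a self-contained illustration of the same principle, here is a sketch in plain Python. It does not use Permit's SDK; `Request`, `POLICIES`, and `is_permitted` are hypothetical names. It shows the ABAC shape: trust travels as a user attribute, risk as a resource attribute, and the policy compares them on every request, denying by default when no policy matches or the risk is unknown.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Request:
    user: dict      # user attributes, e.g. {"key": "alice", "trust": 2}
    action: str     # e.g. "create"
    resource: dict  # resource attributes, e.g. {"type": "transfer", "risk": 3}


# A policy is a predicate over the attributes of a single request.
Policy = Callable[[Request], bool]


def trust_meets_risk(req: Request) -> bool:
    """ABAC condition: the user's trust attribute must meet or exceed the resource's risk."""
    trust = req.user.get("trust", 0)
    risk = req.resource.get("risk", float("inf"))  # unknown risk never passes
    return trust >= risk


# (resource type, action) -> policies that must all pass.
POLICIES: dict[tuple[str, str], list[Policy]] = {
    ("balance", "read"): [trust_meets_risk],
    ("transfer", "create"): [trust_meets_risk],
}


def is_permitted(req: Request) -> bool:
    """Evaluate at request time; deny by default when no policy applies."""
    policies = POLICIES.get((req.resource.get("type"), req.action))
    if not policies:
        return False
    return all(policy(req) for policy in policies)


if __name__ == "__main__":
    # Trust is supplied fresh with each request, so answers change as trust changes.
    alice = {"key": "alice", "trust": 2}
    print(is_permitted(Request(alice, "read", {"type": "balance", "risk": 1})))      # True
    print(is_permitted(Request(alice, "create", {"type": "transfer", "risk": 3})))  # False
```

Keeping the policy as a pure function of request attributes means the caller can recompute `trust` from the latest signals before every check, rather than caching a verdict from login time.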

From Static Labels to Dynamic Security

We are entering a world where trust can no longer be taken for granted. Identity alone is not enough to determine whether someone—or something—should be allowed access. The traditional model of assigning trust as a static attribute at the authentication stage is breaking down. Instead, trust must be earned, reinforced, and dynamically adjusted as new data emerges.

In security, this means shifting from predefined roles and permissions to real-time, adaptive access control models. Trust is not binary—it fluctuates based on behavior, context, and risk. The same user might be trusted to perform an action in one scenario but require additional verification in another.

This is especially important as AI and automation become more deeply integrated into our systems. AI agents are already making purchasing decisions, scheduling transactions, and handling sensitive data. But should an AI assistant that books flights be trusted to make financial transactions? Should an automated system that updates software settings be allowed to approve security changes? These decisions should not be hardcoded as permissions; they should be evaluated dynamically, based on trust scores that evolve over time.

By building access control systems that treat trust as an emergent property, we can create more resilient applications. Instead of relying on outdated assumptions, we move towards a risk-aware model that continuously evaluates who—or what—should be allowed to act.

That’s it for this week. I’d love to hear your thoughts:

How does your system currently define trust?

Does your access control model adapt to risk levels?

Would trust-based policies improve security in your applications?

Let me know in the comments!

Until next time,

Or